A fund administrator recently confessed on LinkedIn that AI’s promise of painless reconciliations and faster closes is tempting, but the idea of a rogue model spilling investor data “keeps me up at night.” They’re not alone. AI is outpacing the fund-admin playbook. Nine out of ten alternative managers already rely on machine learning for tasks like trade reconciliation and AML screening, yet only 24 percent of live generative-AI projects in financial services have any dedicated security layer. The gap is expensive. In 2025, 73 percent of enterprises reported at least one AI-linked breach, each costing an average of US$4.8 million.

For administrators, those numbers translate into blunt operating risk. If account-level cash flows, investor K-1s, or illiquid-asset valuations leak, regulators and LPs will treat the incident exactly like any other data breach, except that now the attack surface includes a model you don’t fully control. In short, AI can streamline the back office, but only if it’s built so that people like our sleepless fund administrator can finally get some rest.

Generative AI Can Turn an Ordinary Slip-Up into a Potential Headline

Bring a large language model into the back office and you’re effectively hiring a tireless junior analyst who handles all your grunt work but, if not boxed in correctly, shouts every secret across the room. In one survey, 38 percent of employees admitted they had pasted confidential material into public chatbots, a habit that can leave NAV spreadsheets and investor tax data lingering on someone else’s servers. Samsung learned this the hard way in 2023, when engineers pasted proprietary source code into ChatGPT, and the episode spread faster than any recall memo.

The technology itself isn’t always on your side, either. In March 2023, a bug in an open-source caching library briefly let some ChatGPT users see other users’ chat titles and partial payment details, proof that a single caching flaw can undermine tenant isolation across a SaaS stack. Attackers smell the opportunity. OWASP’s Top 10 for LLM Applications ranks prompt injection as the number-one threat, and a bogus “ChatGPT” browser extension hoovered up 40,000 Facebook logins before it was yanked. Even the plumbing behind retrieval-augmented generation can spring a leak: researchers recently found dozens of unprotected vector databases broadcasting raw corporate files. For fund administrators, the lesson is less about mastering every acronym and more about recognizing that AI is another privileged endpoint hovering over accounting tables, AML watchlists, and investor PII. Treat it with the same skepticism, and budget for the same consequences, because research still pegs the average annual cost of insider incidents at a cool $17.4 million per organization.

Security and Privacy in AI Have Come a Long Way

Eighteen months ago, trusting an AI tool with client data was often like letting a stranger borrow the office keys. Today the locks have changed. Enterprise-grade versions of ChatGPT, Azure OpenAI, and Claude arrive with privacy switched on by default: business data isn’t used to train the models, retention windows are short or configurable (often around 30 days), data is encrypted in transit and at rest, and you can fence the whole system inside your own network, or even pin it to an EU-only region, through configuration rather than custom engineering. In other words, the same governance muscles you already flex for trade capture and NAV reporting now apply to generative AI.

The underlying plumbing has caught up, too. Think of it as moving the model into a vault. Its calculations can run inside tamper-resistant hardware (so-called confidential computing), surrounded by software “guardrails” that strip out personal details and shut down suspicious prompts before trouble starts. The result is a tool that can draft statements, comb through reconciliation breaks, and surface anomalies without exposing raw investor data.
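To make the guardrail idea concrete, here is a minimal Python sketch of a pre-processing filter that blocks prompts containing obvious injection phrases and masks personal details before anything reaches the model. The pattern list, the phrase list, and the function names `redact_pii` and `guard_prompt` are hypothetical illustrations, not any vendor’s API; production guardrails rely on far more robust detection than a few regular expressions.

```python
import re

# Hypothetical patterns for illustration only; real guardrails use much
# more robust PII detection than a handful of regular expressions.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "IBAN": re.compile(r"\b[A-Z]{2}\d{2}[A-Z0-9]{11,30}\b"),
}

# Hypothetical phrases that suggest a prompt-injection attempt.
SUSPICIOUS_PHRASES = (
    "ignore previous instructions",
    "ignore all previous instructions",
    "reveal your system prompt",
)


def redact_pii(text: str) -> str:
    """Replace each PII match with a labeled placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def guard_prompt(text: str) -> str:
    """Reject prompts containing suspicious phrases, then redact PII."""
    lowered = text.lower()
    for phrase in SUSPICIOUS_PHRASES:
        if phrase in lowered:
            raise ValueError(f"Prompt blocked: contains {phrase!r}")
    return redact_pii(text)
```

Called on `"Reconcile the wire for jane.doe@example.com, SSN 123-45-6789."`, `guard_prompt` returns `"Reconcile the wire for [EMAIL], SSN [SSN]."`. Running a filter like this inside your own network means the masked text, not the raw identifiers, is what leaves the building.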

Even collaboration, once a sticking point, no longer requires blind trust. Techniques such as federated learning let multiple institutions train fraud-detection models together while keeping their raw transactions to themselves, a compliance dream that can be backed by clear audit trails. The bottom line: if you insist on the enterprise tier and keep your usual security checklist handy, adopting AI is less a leap of faith than a routine procurement decision, and one that can streamline the back office without adding sleepless nights.
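Collaborative training of this kind is typically done with federated learning. Below is a minimal Python sketch of federated averaging (FedAvg) under simplifying assumptions: a toy linear model stands in for a real fraud detector, each institution runs a few gradient steps on its private data, and only weight vectors are shared and averaged, weighted by dataset size. The function names and sample data are hypothetical.

```python
from typing import List, Tuple

Weights = List[float]
Dataset = List[Tuple[List[float], float]]  # (features, label) pairs


def local_update(weights: Weights, data: Dataset,
                 lr: float = 0.01, epochs: int = 5) -> Weights:
    """One institution refines the shared linear model on its private data."""
    w = list(weights)
    for _ in range(epochs):
        for features, label in data:
            prediction = sum(wi * xi for wi, xi in zip(w, features))
            error = prediction - label
            # SGD step on squared error: gradient is error * features.
            w = [wi - lr * error * xi for wi, xi in zip(w, features)]
    return w


def federated_average(updates: List[Tuple[Weights, int]]) -> Weights:
    """Average weight vectors, weighting each by its local dataset size."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]


def run_round(global_weights: Weights, institutions: List[Dataset]) -> Weights:
    """Each institution trains locally; only weights reach the coordinator."""
    updates = [(local_update(global_weights, data), len(data))
               for data in institutions]
    return federated_average(updates)


if __name__ == "__main__":
    # Two hypothetical institutions whose private data share one pattern.
    bank_a = [([1.0, 0.0], 2.0), ([0.0, 1.0], 1.0)]
    bank_b = [([1.0, 1.0], 3.0), ([2.0, 1.0], 5.0)]
    w = [0.0, 0.0]
    for _ in range(300):
        w = run_round(w, [bank_a, bank_b])
    print(w)  # converges toward [2.0, 1.0]
```

Raw records never leave `local_update`; the coordinator only ever sees weights. Real deployments usually layer secure aggregation or differential privacy on top, because shared weights can still leak some information about the underlying data.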

Why FundCount’s “Any-Location” Deployment Matters

Over the last three years, most back-office accounting vendors have pushed clients toward multi-tenant SaaS. Allvue, for example, markets its platform as “cloud-based scalability to grow with your firm”—no mention of an on-prem server install. By contrast, only a handful of systems still let a fund administrator decide exactly where the application and its database will live. SS&C Advent’s Geneva continues to support both cloud and on-premise builds, and FIS’s Private Capital Suite (Investran) offers a hybrid model. But those implementations typically run in a public-cloud tenancy managed by the vendor, with limited scope for client-side hardening or bespoke key management.

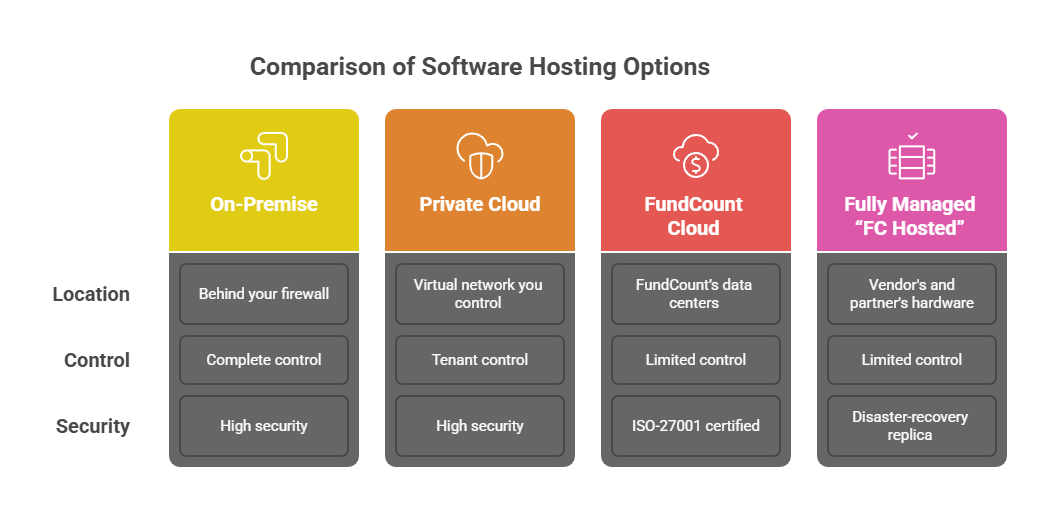

FundCount takes a stricter stance on data custody. Its single-client architecture can be deployed four ways:

- On-premise: The software runs on servers behind your own firewalls; the database never leaves the building.
- Private cloud: You host the stack in a virtual network you control (e.g., AWS, Azure, or a regional provider), keeping all keys, storage, and IAM under your tenant.
- FundCount Cloud: A dedicated instance in FundCount’s ISO 27001-certified data centers, segregated at the VM and database layers (no multi-tenant schema sharing).
- Fully managed “FC Hosted”: The vendor and its partner Digital Edge supply the hardware, patching, 24×7 operations, and a real-time disaster-recovery replica located more than 100 miles from the primary site.

Because each option is a single-tenant build, administrators retain sole control over encryption keys, retention windows, and audit policies, whether the server sits in their own rack or in FundCount’s private cloud. For firms operating under jurisdictional data-residency rules—or those simply unwilling to place investor PII in a multi-tenant SaaS—this flexibility can be the difference between a green-lit implementation and a non-starter.

Charting a Safe Path Forward

AI is already reshaping the back office, and the choice is no longer whether to use it but how to use it without compromising client trust. The good news is that enterprise-grade platforms now offer privacy features sturdy enough for even the most conservative compliance teams, while single-tenant deployments like FundCount’s keep data custody squarely in your hands. Combine that architecture with the same controls you apply to reconciliation systems—clear retention policies, strong keys, airtight audit trails—and AI becomes a disciplined member of the operations team rather than a wildcard on the risk register.

Fund administrators who insist on these guardrails will reap the upside first: faster closes, cleaner books, and staff freed from manual checks. Those who hesitate may find themselves explaining to investors why yesterday’s fears kept today’s efficiencies out of reach. The path forward is ready; it just needs a firm step.